You certainly didn’t miss yesterday’s announcement of Alexa Conversations at re:MARS 2019, but I think this is a great place to talk about it in some more detail.

I’ll start off by reviewing what we know so far, and then I look forward to hearing your thoughts on it. Speculation, ideas for possible use cases, and criticism are explicitly welcome!

So, here’s how Rohit Prasad, VP and Head Scientist of Alexa AI, presented Alexa Conversations:

The key to making Alexa useful for our customers is to make it more natural to discover and use her functionality.

To provide more utility we envision a world where customers will converse naturally with Alexa: seamlessly transitioning between topics, asking questions, making choices, and speaking the same way that you would with a friend, or family member.

Today, I am excited to announce the private preview of Alexa Conversations, a deep learning-based approach for creating natural voice experiences on Alexa with less effort, fewer lines of code, and less training data than ever before. Hand coding of the dialog flow is replaced by a recurrent neural network that automatically models the dialog flow from developer provided input. With Alexa Conversations, it’s easier for developers to construct dialogue flows for their skills.

Source: Transcription of the presentation, 9:48 am - 9:50 am

So, that seems to be Alexa Conversations in the narrow sense: A tool to build Skills by training an AI on conversations, instead of manually coding the flow of the conversation. Before we move on, let’s look at this in some more detail.

The Alexa Developer Blog post gets into some more depth:

Alexa Conversations combines an AI-driven dialog manager with an advanced dialog simulation engine that automatically generates synthetic training data. You provide API(s), annotated sample dialogs that include the prompts that you want Alexa to say to the customer, and the actions you expect the customer to take. Alexa Conversations uses this information to generate dialog flows and variations, learning the large number of paths that the dialogs could take.

So, it sounds to me like this brings an architectural change in how voice apps are built. So far, Alexa Skills consist of two parts: NLU (to which the developer contributes the language model) and conversation + fulfillment logic (provided entirely by the developer). Apparently, Alexa Conversations separates the conversation (structure) logic from the fulfillment (content) logic, with only the latter being provided entirely by the developer and the conversation logic being trained in a similar way to the language model? Dialog Management, which has been around for a while and which Alexa developers promote as a best practice, is already a step in that direction.
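
To make that split a bit more concrete, here is a minimal Python sketch of what the developer might hand over under this reading. Everything in it is hypothetical: `Turn`, `SAMPLE_DIALOG`, `APIS`, `find_showtimes` and `replay` are names I made up for illustration, and this is not the actual Alexa Conversations format or API, which is only available in private preview. The point is only that the conversation structure arrives as annotated examples, while the fulfillment stays ordinary code.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Turn:
    """One annotated turn of a sample dialog (hypothetical format)."""
    speaker: str                      # "user" or "alexa"
    text: str                         # what is said in this turn
    action: Optional[str] = None      # annotated API call, if any
    slots: Dict[str, str] = field(default_factory=dict)


# Fulfillment (content) logic: still written entirely by the developer.
def find_showtimes(movie: str, city: str) -> List[str]:
    # Placeholder data; a real skill would query its own backend here.
    return [f"{movie} in {city} at 18:00", f"{movie} in {city} at 20:30"]


# Conversation (structure) logic: provided as an example, not as code.
SAMPLE_DIALOG = [
    Turn("user", "I want to see a movie in Seattle", slots={"city": "Seattle"}),
    Turn("alexa", "Which movie would you like to see?"),
    Turn("user", "Dune", slots={"movie": "Dune"}),
    Turn("alexa", "Here are the showtimes.", action="find_showtimes"),
]

# Registry mapping annotated action names to fulfillment functions.
APIS = {"find_showtimes": find_showtimes}


def replay(dialog: List[Turn]) -> List[str]:
    """Collect slots across turns and call the API annotated on a turn."""
    collected: Dict[str, str] = {}
    results: List[str] = []
    for turn in dialog:
        collected.update(turn.slots)
        if turn.action is not None:
            results = APIS[turn.action](**collected)
    return results
```

Calling `replay(SAMPLE_DIALOG)` walks through the sample, gathers the `city` and `movie` slots, and invokes `find_showtimes` at the annotated turn. In the real product, a trained model would presumably decide when to make that call instead of a fixed replay loop.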

The blog article continues:

In the past, developers scripted every potential turn, built an interaction model, managed dialog rules, wrote back-end business logic, and analyzed logs to test and iterate. […]

Now, you provide dialog samples and Alexa Conversations predictively models the dialog path using a deep, recurrent neural network. At runtime this neural network takes the entire session’s dialog history into account and predicts the optimal next action or step in the dialog […].
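
To illustrate what "takes the entire session's dialog history into account" could mean, here is a toy sketch in Python. It is not a neural network and not how Amazon implements it; it is a simple frequency table, standing in for the recurrent network, that maps the full history seen in the sample dialogs to the action that followed. The event names, `train`, `predict_next` and the fallback action are all my own assumptions.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

History = Tuple[str, ...]


def train(dialogs: List[List[str]]) -> Dict[History, Counter]:
    """Count which event followed each full dialog history in the samples."""
    model: Dict[History, Counter] = defaultdict(Counter)
    for dialog in dialogs:
        for i in range(1, len(dialog)):
            model[tuple(dialog[:i])][dialog[i]] += 1
    return model


def predict_next(model: Dict[History, Counter], history: List[str]) -> str:
    """Return the most frequent follow-up for this exact history."""
    key = tuple(history)
    if key in model:
        return model[key].most_common(1)[0][0]
    # Unseen history: a real system would generalize, this toy one gives up.
    return "alexa:ask_for_clarification"


# Two hypothetical sample dialogs, written as sequences of events.
SAMPLES = [
    ["user:request_tickets", "alexa:ask_movie", "user:give_movie",
     "alexa:ask_time", "user:give_time", "api:buy_tickets"],
    # Out-of-sequence: the user volunteers the time first.
    ["user:give_time", "alexa:ask_movie", "user:give_movie",
     "api:buy_tickets"],
]

model = train(SAMPLES)
```

Both samples contain the event `user:give_movie`, but the predicted next step differs: after `user:request_tickets` the table suggests `alexa:ask_time`, while after `user:give_time` it goes straight to `api:buy_tickets`. That dependence on everything said so far, rather than on the last utterance alone, is the part a recurrent network would learn and generalize from far fewer explicit paths.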

So far it kind of sounds similar to Dialog Management, but this is where it gets promising:

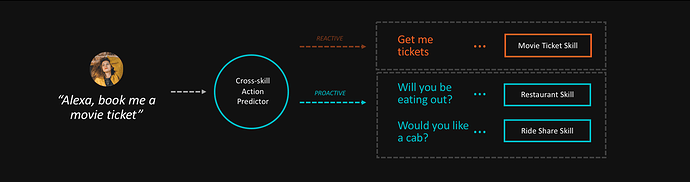

It [the neural network] is trained to interpret dialog context in order to handle multiple user workflows, accommodate natural user input (like out-of-sequence information or corrections), address common business transaction errors, and proactively recommend additional API functionality.

So, the promise is that it can handle user input far beyond the happy path, even up to error cases.

And all of that while enabling the developer to focus on the fulfillment:

For example, the Atom Tickets skill used 5,500 lines of code and nearly 800 training examples.

The Atom Tickets skill built with Alexa Conversations shrank almost 70%, to just 1,700 lines of code, and needed only 13 customer dialog samples.

So, it sounds like something big is coming, right? If you want to participate in Amazon’s developer preview, you can apply here.

Of course, this is only Alexa Conversations in the narrow sense, and I will soon add another post with the bigger picture. But I’m already curious to hear your thoughts on this… Are you optimistic about the capabilities of conversational AI to model your Skill’s conversational structure? Do you think it will make sense for some Skills (maybe what Vasili from Invocable described as integrations: "the brain of this voice app is somewhere else — in a restaurant’s CRM, Uber’s backend, or NYT’s content management system."), but not for others (such as voice games and interactive stories)?

Let me know what you think!

