Roger Kibbe, developer evangelist for Bixby, just shared a highly interesting piece of content on Twitter that I wanted to put up for discussion here!

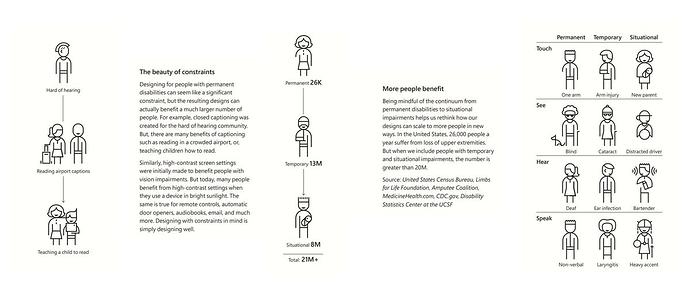

For your convenience, I’m posting the chart (AFAIK originally by Jen Gentleman) here:

What I found so interesting about this is the message that disability is not a binary state but spans the entire scale from 0 to 100%, and that a lot of people experience at least a situational disability at some point. This is also the gist of this humorous voice-themed clip:

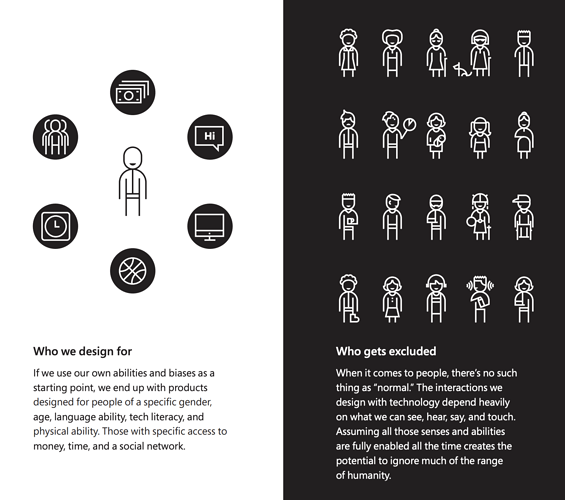

The question is: How can we as voice app designers and developers make voice apps more accessible? Some things that come to my mind are:

- Decrease the speaking rate of TTS-generated output (using the SSML `prosody` tag)

- Make voice apps multimodal (including touch input)

- Build a comprehensive language model that covers the different ways various social groups would phrase each intent

- Don’t require the user to use complex utterances, maybe even stick with the simplest way of input (yes/no) where possible
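
To make the first suggestion in the list concrete, here is a minimal sketch of wrapping a response in an SSML `prosody` element with a reduced speaking rate. The helper name `slow_down` and the set of accepted rates are my own assumptions for illustration; which `rate` values a given platform actually honors varies, so check the SSML reference of the assistant you are targeting.

```python
from xml.sax.saxutils import escape

# Rate labels defined by the SSML spec; individual platforms may support only a subset.
ALLOWED_RATES = {"x-slow", "slow", "medium", "fast", "x-fast"}


def slow_down(text: str, rate: str = "slow") -> str:
    """Wrap plain text in SSML that asks the TTS engine for the given speaking rate.

    `rate` is either one of the labels above or a percentage such as "80%".
    (Hypothetical helper, not part of any voice platform SDK.)
    """
    is_percentage = rate.endswith("%") and rate[:-1].isdigit()
    if rate not in ALLOWED_RATES and not is_percentage:
        raise ValueError(f"unsupported rate: {rate!r}")
    # Escape &, < and > so user-facing text cannot break the SSML markup.
    return f'<speak><prosody rate="{rate}">{escape(text)}</prosody></speak>'


if __name__ == "__main__":
    print(slow_down("Your order has been placed."))
    print(slow_down("Say yes or no.", rate="80%"))
```

The speaking rate could also be exposed as a user setting, so that people who need slower (or faster) speech can pick it themselves rather than having one pace imposed on everyone.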

Looking forward to hearing your thoughts on this!