Hey all,

I wanted to bring up a topic that we’ve been discussing for a while: How to structure your i18n content in different keys.

We usually use nested objects like in this template:

"welcome": {

"speech": "Hello World! What's your name?",

"reprompt": "Please tell me your name."

},

And then access it in the code like this:

HelloWorldIntent() {

this.ask(this.t('welcome.speech'), this.t('welcome.reprompt'));

},

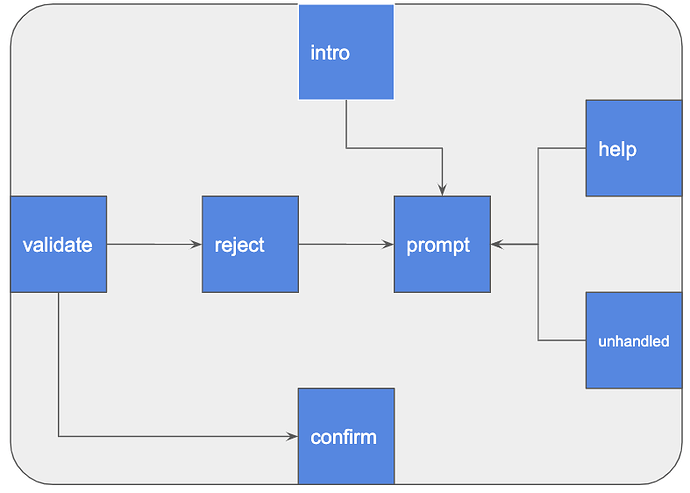

The more complex content structures we built, the more we thought about standardizing it more. Having everything in a speech element felt weird, e.g in cases where you want to repeat things.

The question is: How can we split parts of the speech up into structured keys?

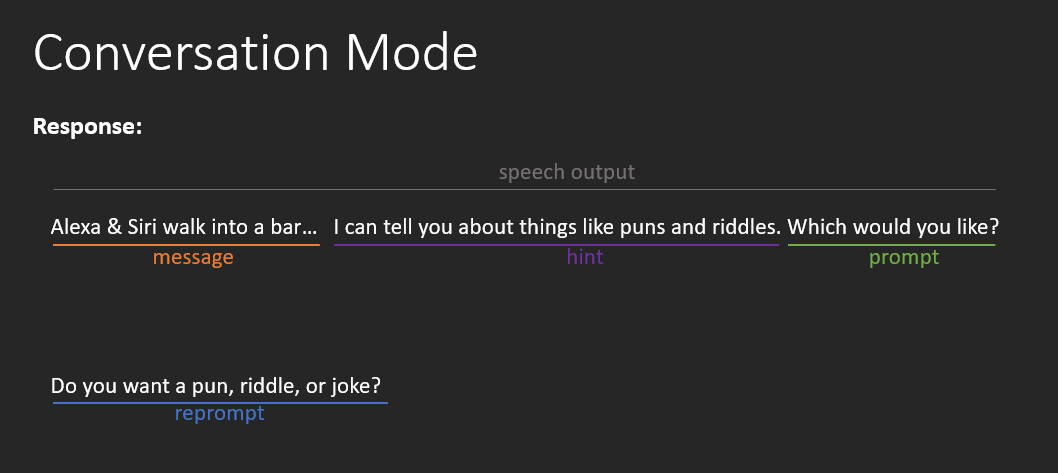

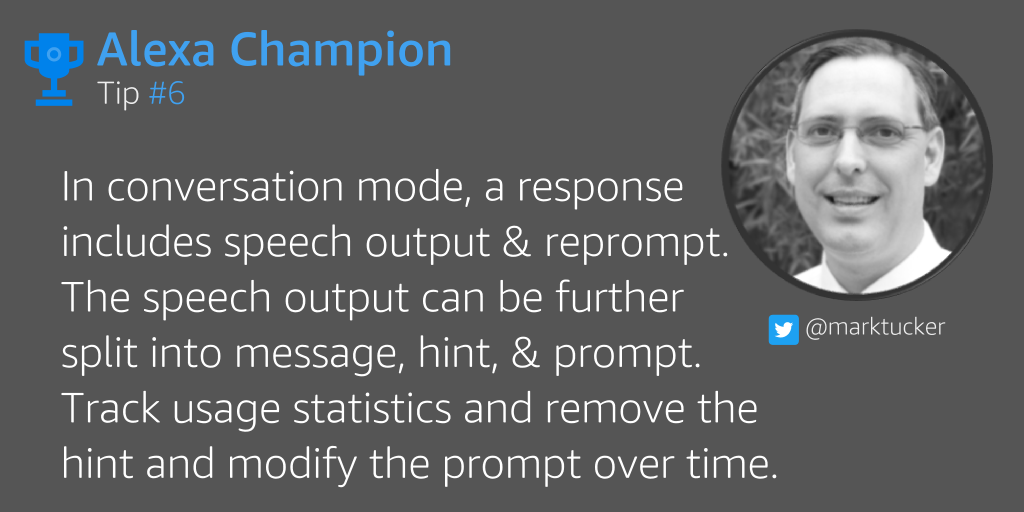

@marktucker shares interesting Alexa Skill development tips in a GitHub repository, and mentions several content types, including message, hint, and prompt for the speech:

He defines the different parts of the output in conversation mode like this:

This made me think if we could use something like this as a best practice:

"welcome": {

"message": "Hello World!",

"prompt": "What's your name?",

"reprompt": "Please tell me your name."

},

With the SpeechBuilder, we could then build it like this:

HelloWorldIntent() {

this.$speech.addT('welcome.message')

.addT('welcome.prompt');

this.$reprompt.addT('welcome.reprompt');

this.ask(this.$speech, this.$reprompt);

},

However, this feels a little redundant. So for people who want to use that structure, we might be able to do this (and grab the values in the background after validating that the key returns an object):

HelloWorldIntent() {

this.ask(this.t('welcome'));

},

This would free up some redundant code and allow content creators to add hints (and even rules for hints?) at the CMS level, making additional work on the code for changes like this unnecessary.

What do you think?

Conversational Components

Conversational Components