Take a Google Assistant action with a single intent and a single Type that collects all text.

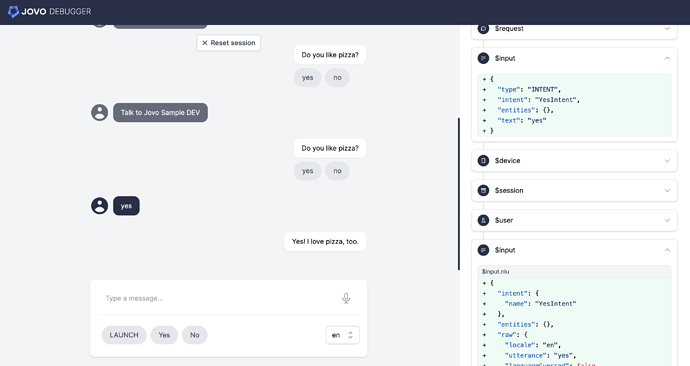

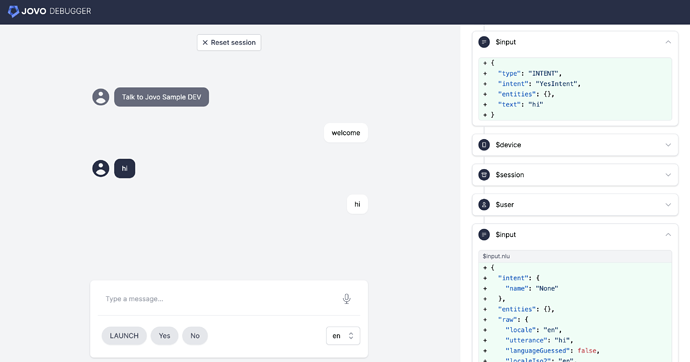

This sends a request of type INTENT that already contains Intent and Entities properties.

So the Jovo interpretation module does not call `nlpjsnlu.processText`.

`this.$input.entities` contains the full utterance, captured by the GA Type that collects all/any text.

`this.$input` does not contain an `nluData` property.

`this.$input` contains the intent and entities from GA, not from nlpjs.

- Adding INTENT to `supportingTypes` does not cause nlpjsnlu to be called.

- Calling `nlpjsnlu.processText` directly fails.

- nlpjsnlu is not added to `GoogleAssistantPlatform.plugins`: it is `{}`, even when nlpjsnlu is added in the config.
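To make the observed behaviour concrete, here is a minimal TypeScript model of it. It assumes, based only on the observations above and not on Jovo's source, that NLU is skipped whenever the input type is not supported or the platform has already resolved an intent. `InputType`, `InputLike` and `shouldRunNlu` are hypothetical names, and `anyText` is a placeholder for the GA catch-all Type's entity name.

```typescript
// Hypothetical model of the observed behaviour; this is NOT Jovo's source code.
// InputType, InputLike, shouldRunNlu and the entity name "anyText" are illustrative only.

type InputType = "INTENT" | "TEXT" | "TRANSCRIBED_SPEECH";

interface InputLike {
  type: InputType;
  text?: string;
  intent?: string;
  entities?: Record<string, { value: string }>;
  nluData?: unknown;
}

// In this model, NLU runs only when the input type is supported AND
// the platform has not already resolved an intent.
function shouldRunNlu(input: InputLike, supportedTypes: InputType[]): boolean {
  if (!supportedTypes.includes(input.type)) {
    return false;
  }
  // An INTENT input from Google Assistant already carries an intent,
  // so (in this model) there is nothing left for the NLU to interpret.
  return input.intent === undefined;
}

// Assumed shape of a GA request with a catch-all Type (placeholder values).
const fromGoogle: InputLike = {
  type: "INTENT",
  intent: "CatchAllIntent",
  entities: { anyText: { value: "book a table for two" } },
};

console.log(shouldRunNlu(fromGoogle, ["TEXT"])); // false: INTENT is not a supported type
console.log(shouldRunNlu(fromGoogle, ["TEXT", "INTENT"])); // false: an intent is already set
```

Under this model, adding INTENT to the supported types alone would not be enough, which matches the first bullet above.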

How can we call nlpjsnlu to parse the complete utterance supplied by GA, and thus get an `nluData` property containing the nlpjs intent and entities?
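One possible workaround, sketched under loud assumptions: read the raw utterance from the GA catch-all entity and pass it to a text NLU yourself, storing the result as `nluData`. `TextNlu`, `NluData`, `GoogleInput`, `withNluData`, `keywordNlu` and the entity name `anyText` are all hypothetical. `processText` here only mirrors the method name mentioned above; the real nlpjsnlu method may take different arguments. A toy keyword matcher stands in for nlp.js so the sketch runs on its own.

```typescript
// Hypothetical sketch: TextNlu, NluData, GoogleInput, withNluData and keywordNlu
// are illustrative names, not part of Jovo or nlp.js.

interface NluData {
  intent?: { name: string };
  entities?: Record<string, { value: string }>;
}

// Assumed interface; the real nlpjsnlu processText signature may differ.
interface TextNlu {
  processText(text: string): Promise<NluData>;
}

interface GoogleInput {
  type: "INTENT";
  intent?: string;
  entities?: Record<string, { value: string }>;
  nluData?: NluData;
}

// Pull the raw utterance out of the catch-all entity, run it through a
// text NLU, and attach the result as nluData. The intent and entities
// that came from Google Assistant are left untouched.
async function withNluData(
  input: GoogleInput,
  catchAllEntity: string,
  nlu: TextNlu,
): Promise<GoogleInput> {
  const utterance = input.entities?.[catchAllEntity]?.value;
  if (!utterance) {
    return input; // nothing to re-interpret
  }
  const nluData = await nlu.processText(utterance);
  return { ...input, nluData };
}

// Toy stand-in for an NLU so the sketch runs without nlp.js.
const keywordNlu: TextNlu = {
  async processText(text: string): Promise<NluData> {
    const name = /\bbook\b/i.test(text) ? "BookTableIntent" : "None";
    return { intent: { name } };
  },
};

withNluData(
  { type: "INTENT", intent: "CatchAllIntent", entities: { anyText: { value: "book a table for two" } } },
  "anyText",
  keywordNlu,
).then((result) => console.log(result.nluData?.intent?.name)); // BookTableIntent
```

Returning a new object with `nluData` added alongside the GA intent, rather than overwriting it, keeps both interpretations available to the handler.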

I never realised it might show that I was doing something wrong.
